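1. To improve the accuracy of the multilayer perceptron (MLP) model, increase the number of neurons in the hidden layer from the original 256 to 1000.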

model.add(Dense(units=1000, input_dim=784, kernel_initializer='normal', activation='relu'))
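A Dense layer computes activation(x·W + b) for its input vector x. The plain-Python sketch below spells that out for a made-up layer with 3 inputs and 2 units; the sizes and weight values are hypothetical, and Keras performs the same computation with tensor operations rather than Python loops. `kernel_initializer='normal'` only controls how W is randomly initialised before training, so it does not appear here.

```python
def relu(z):
    """Rectified linear unit: max(0, z)."""
    return max(0.0, z)


def dense_forward(x, weights, biases, activation=relu):
    """Compute one Dense layer: activation(x @ W + b).

    x       -- list of input values (length n_inputs)
    weights -- n_inputs rows, each a list of n_units weights
    biases  -- list of n_units biases
    """
    outputs = []
    for j in range(len(biases)):
        # Weighted sum of all inputs for unit j, plus that unit's bias
        z = sum(x[i] * weights[i][j] for i in range(len(x))) + biases[j]
        outputs.append(activation(z))
    return outputs


# Hypothetical toy layer: 3 inputs -> 2 units
x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0],
     [0.25, 0.0],
     [-0.5, 0.5]]
b = [0.0, 0.1]
print(dense_forward(x, W, b))  # [0.0, 0.6]
```

In the model above, x would hold 784 values per image (assuming the usual flattened 28×28 MNIST digits) and the layer would produce 1000 outputs, each passed through ReLU.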

2. View the model summary

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

dense_1 (Dense) (None, 1000) 785000

_________________________________________________________________

dense_2 (Dense) (None, 10) 10010

=================================================================

Total params: 795,010

Trainable params: 795,010

Non-trainable params: 0

_________________________________________________________________

None
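The Param # column is weights plus biases: a Dense layer with n inputs and m units has n·m weights and m biases. The sketch below reproduces the numbers in the summary. The last line assumes the earlier 256-unit model had the same 784-hidden-10 structure, which the text implies but does not show.

```python
def dense_params(n_inputs, n_units):
    """Trainable parameters of a Dense layer: one weight per input per unit, plus one bias per unit."""
    return n_inputs * n_units + n_units


def mlp_params(n_inputs, n_hidden, n_outputs):
    """Total parameters of an MLP with a single hidden layer."""
    return dense_params(n_inputs, n_hidden) + dense_params(n_hidden, n_outputs)


print(dense_params(784, 1000))    # dense_1: 785000
print(dense_params(1000, 10))     # dense_2: 10010
print(mlp_params(784, 1000, 10))  # total:   795010

# Assumed earlier model with a 256-unit hidden layer, for comparison
print(mlp_params(784, 256, 10))   # 203530
```

Under that assumption, widening the hidden layer from 256 to 1000 units raises the parameter count from 203,530 to 795,010, roughly 3.9 times as many, which gives the model more capacity to fit (and overfit) the training data.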

3. Start training

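The screen output after running the training command shows that 10 training epochs were run in total. Over these epochs the loss decreases and the accuracy increases; this holds at every epoch for the training metrics (loss, acc), while the validation metrics (val_loss, val_acc) level off in the last few epochs.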

Epoch 1/10

– 6s – loss: 0.2890 – acc: 0.9179 – val_loss: 0.1483 – val_acc: 0.9578

Epoch 2/10

– 6s – loss: 0.1154 – acc: 0.9660 – val_loss: 0.1021 – val_acc: 0.9683

Epoch 3/10

– 6s – loss: 0.0718 – acc: 0.9798 – val_loss: 0.0885 – val_acc: 0.9733

Epoch 4/10

– 6s – loss: 0.0499 – acc: 0.9865 – val_loss: 0.0863 – val_acc: 0.9748

Epoch 5/10

– 6s – loss: 0.0363 – acc: 0.9893 – val_loss: 0.0789 – val_acc: 0.9764

Epoch 6/10

– 6s – loss: 0.0259 – acc: 0.9935 – val_loss: 0.0727 – val_acc: 0.9773

Epoch 7/10

– 6s – loss: 0.0180 – acc: 0.9956 – val_loss: 0.0736 – val_acc: 0.9773

Epoch 8/10

– 6s – loss: 0.0131 – acc: 0.9975 – val_loss: 0.0715 – val_acc: 0.9786

Epoch 9/10

– 6s – loss: 0.0091 – acc: 0.9986 – val_loss: 0.0694 – val_acc: 0.9800

Epoch 10/10

– 6s – loss: 0.0068 – acc: 0.9991 – val_loss: 0.0747 – val_acc: 0.9784
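Rather than reading the trend by eye, the epoch lines can be parsed and checked. The sketch below copies the ten per-epoch lines from the log (with plain hyphens), extracts each `name: value` pair with a regular expression, and tests whether each metric moves monotonically. The regex and the helper names are illustrative only, not part of Keras.

```python
import re

# Per-epoch lines copied from the training log above
LOG = """\
 - 6s - loss: 0.2890 - acc: 0.9179 - val_loss: 0.1483 - val_acc: 0.9578
 - 6s - loss: 0.1154 - acc: 0.9660 - val_loss: 0.1021 - val_acc: 0.9683
 - 6s - loss: 0.0718 - acc: 0.9798 - val_loss: 0.0885 - val_acc: 0.9733
 - 6s - loss: 0.0499 - acc: 0.9865 - val_loss: 0.0863 - val_acc: 0.9748
 - 6s - loss: 0.0363 - acc: 0.9893 - val_loss: 0.0789 - val_acc: 0.9764
 - 6s - loss: 0.0259 - acc: 0.9935 - val_loss: 0.0727 - val_acc: 0.9773
 - 6s - loss: 0.0180 - acc: 0.9956 - val_loss: 0.0736 - val_acc: 0.9773
 - 6s - loss: 0.0131 - acc: 0.9975 - val_loss: 0.0715 - val_acc: 0.9786
 - 6s - loss: 0.0091 - acc: 0.9986 - val_loss: 0.0694 - val_acc: 0.9800
 - 6s - loss: 0.0068 - acc: 0.9991 - val_loss: 0.0747 - val_acc: 0.9784
"""

# One dict per epoch: {"loss": ..., "acc": ..., "val_loss": ..., "val_acc": ...}
history = [
    {name: float(value) for name, value in re.findall(r"(\w+): ([\d.]+)", line)}
    for line in LOG.strip().splitlines()
]


def series(metric):
    """Values of one metric across all epochs, in order."""
    return [epoch[metric] for epoch in history]


def strictly_decreasing(values):
    return all(a > b for a, b in zip(values, values[1:]))


def strictly_increasing(values):
    return all(a < b for a, b in zip(values, values[1:]))


print("training loss falls every epoch:", strictly_decreasing(series("loss")))
print("training acc rises every epoch:", strictly_increasing(series("acc")))
print("val_loss falls every epoch:", strictly_decreasing(series("val_loss")))

# Epoch (1-based) with the highest validation accuracy
best = max(range(len(history)), key=lambda i: history[i]["val_acc"])
print("best val_acc:", history[best]["val_acc"], "at epoch", best + 1)
```

On these numbers, training loss falls and training accuracy rises at every epoch, while val_loss is lowest (0.0694) and val_acc highest (0.9800) at epoch 9, and both get slightly worse at epoch 10. In Keras, the History object returned by `model.fit()` holds the same values in its `.history` dict, so parsing text is only needed when the printed log is all you have.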

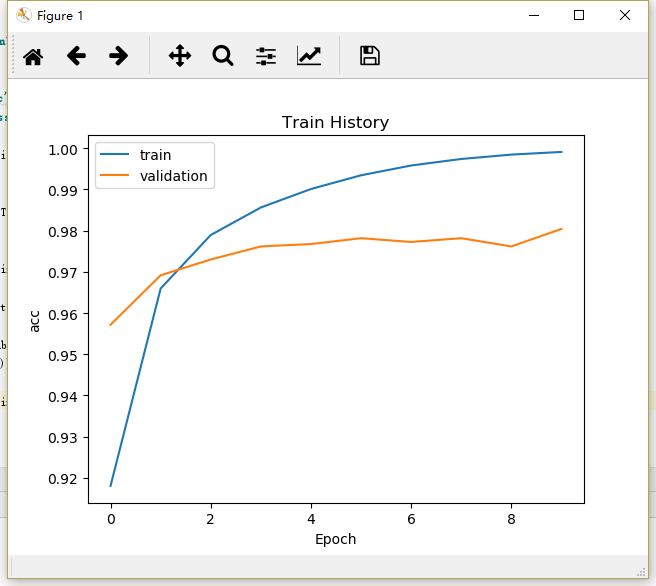

4. Inspect the accuracy during training

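The output shows that 10 training epochs were run in total. We can observe that:

Both training and validation accuracy improve overall: acc rises from 0.9179 to 0.9991, and val_acc rises from 0.9578 to a peak of 0.9800 at epoch 9.

From epoch 3 onward, the training accuracy (acc) is higher than the validation accuracy (val_acc), and the gap widens in the later epochs, which indicates more severe overfitting.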
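The overfitting trend can be quantified as the gap between training and validation accuracy at each epoch. The values below are copied from the log; a positive gap means the model scores higher on the data it was trained on than on the held-out validation data.

```python
# acc and val_acc per epoch, copied from the training log
acc = [0.9179, 0.9660, 0.9798, 0.9865, 0.9893, 0.9935, 0.9956, 0.9975, 0.9986, 0.9991]
val_acc = [0.9578, 0.9683, 0.9733, 0.9748, 0.9764, 0.9773, 0.9773, 0.9786, 0.9800, 0.9784]

# Positive gap: better on training data than on validation data
gaps = [round(a - v, 4) for a, v in zip(acc, val_acc)]
for epoch, gap in enumerate(gaps, start=1):
    print(f"epoch {epoch:2d}: acc - val_acc = {gap:+.4f}")

# First epoch at which training accuracy overtakes validation accuracy
first_ahead = next(e for e, g in enumerate(gaps, start=1) if g > 0)
print("acc first exceeds val_acc at epoch", first_ahead)
```

The gap turns positive at epoch 3 and reaches about 0.0207 at epoch 10, though it does not widen at every single epoch (it dips slightly from epoch 8 to epoch 9).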

5. Evaluate prediction accuracy on the test set

10000/10000 [==============================] - 1s 72us/step

accuracy= 0.9823
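Accuracy is the fraction of evaluated samples whose predicted class matches the label. The sketch below shows that calculation for hypothetical softmax outputs, taking the highest-probability class as the prediction. It assumes, as is typical for this kind of model, a softmax output layer and one-hot labels, in which case Keras's accuracy metric also compares arg-max indices. It then converts the reported 0.9823 into counts for the 10,000 evaluated samples.

```python
def argmax(values):
    """Index of the largest value (the predicted class for one sample)."""
    return max(range(len(values)), key=lambda i: values[i])


def accuracy(y_true, probs):
    """Fraction of samples whose highest-probability class equals the label."""
    correct = sum(1 for label, p in zip(y_true, probs) if argmax(p) == label)
    return correct / len(y_true)


# Hypothetical softmax outputs for 4 samples over 3 classes
probs = [
    [0.1, 0.7, 0.2],  # predicts class 1
    [0.8, 0.1, 0.1],  # predicts class 0
    [0.3, 0.3, 0.4],  # predicts class 2
    [0.6, 0.3, 0.1],  # predicts class 0
]
labels = [1, 0, 2, 1]
print(accuracy(labels, probs))  # 0.75

# The reported test accuracy expressed as counts
test_size = 10000
correct = round(0.9823 * test_size)
print(correct, test_size - correct)  # 9823 177
```

So an accuracy of 0.9823 corresponds to 9,823 correctly classified samples and 177 misclassified ones out of 10,000.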