(1)数据集生成

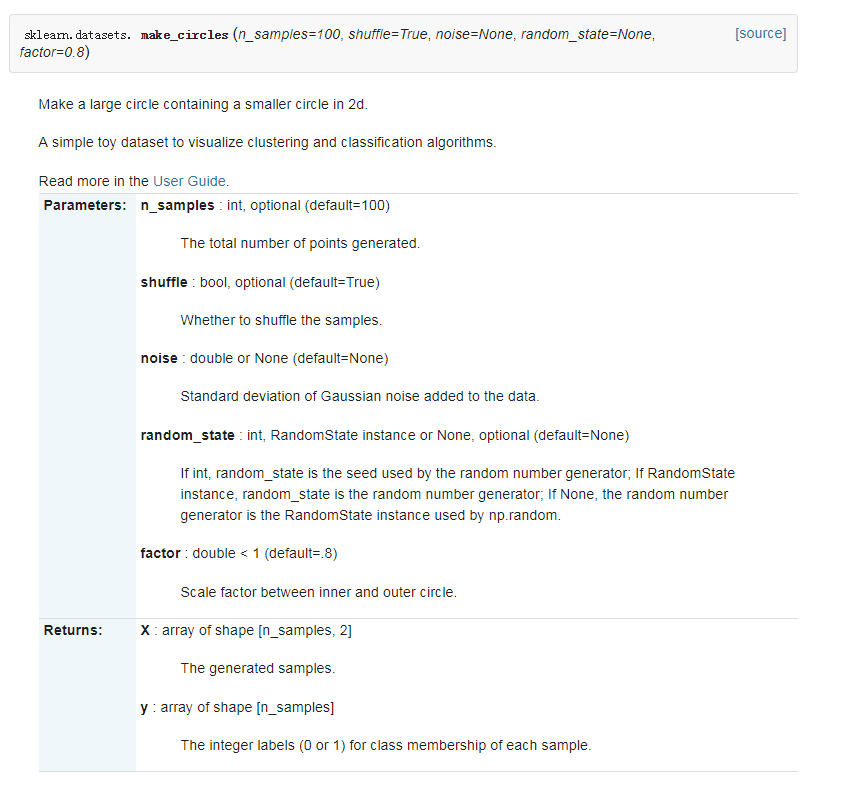

data, features = make_circles(n_samples=N, shuffle=True, noise= 0.12, factor=0.4)

(2)模型结构

这里面的变量除了存放原始数据,还有一个列表,用来存放为每个测试数据预测的测试结果

(3)损失函数描述

在聚类问题中,我们使用的距离描述跟前面一样,都是欧几里得距离,在每一个聚类的循环中,计算测试点与每个存在的训练点之间的距离,找到最接近那个训练点的索引,使用该索引寻找最近邻的点的类

distances = tf.reduce_sum(tf.square(tf.subtract(i , tr_data)),reduction_indices=1) neighbor = tf.arg_min(distances,0)

(4)停止条件

本例中,当处理完测试集中所有的样本后,整个过程结束

import tensorflow as tf

import numpy as np

import time

import matplotlib

import matplotlib.pyplot as plt

from sklearn.datasets.samples_generator import make_circles

N=210 #数量

K=2 #簇

# Maximum number of iterations, if the conditions are not met

MAX_ITERS = 1000 #最大迭代次数

cut=int(N*0.7) #把数据集分割为30% 70%

start = time.time()

data, features = make_circles(n_samples=N, shuffle=True, noise= 0.12, factor=0.4) #生成数据集

tr_data, tr_features= data[:cut], features[:cut] #70%

te_data,te_features=data[cut:], features[cut:] #30%

fig, ax = plt.subplots()

ax.scatter(tr_data.transpose()[0], tr_data.transpose()[1], marker = 'o', s = 100, c = tr_features, cmap=plt.cm.coolwarm )

plt.plot()

plt.show()

points=tf.Variable(data) #TF声明

cluster_assignments = tf.Variable(tf.zeros([N], dtype=tf.int64)) #初始化数组

sess = tf.Session()

sess.run(tf.initialize_all_variables()) #完成初始化

test=[]

for i, j in zip(te_data, te_features):

distances = tf.reduce_sum(tf.square(tf.subtract(i , tr_data)),reduction_indices=1)

neighbor = tf.arg_min(distances,0)

#print tr_features[sess.run(neighbor)]

#print j

test.append(tr_features[sess.run(neighbor)])

print(test)

fig, ax = plt.subplots()

ax.scatter(te_data.transpose()[0], te_data.transpose()[1], marker = 'o', s = 100, c = test, cmap=plt.cm.coolwarm )

plt.plot()

plt.show()

#rep_points_v = tf.reshape(points, [1, N, 2])

#rep_points_h = tf.reshape(points, [N, 2])

#sum_squares = tf.reduce_sum(tf.square(rep_points - rep_points), reduction_indices=2)

#print(sess.run(tf.square(rep_points_v - rep_points_h)))

end = time.time()

print ("Found in %.2f seconds" % (end-start))

print("Cluster assignments:", test)